The next time you’re due for a medical exam you may get a call from someone like Ana: a friendly voice that can help you prepare for your appointment and answer any pressing questions you might have.

With her calm, warm demeanor, Ana has been trained to put patients at ease, like many nurses across the U.S. But unlike them, she is also available to chat 24-7, in multiple languages, from Hindi to Haitian Creole.

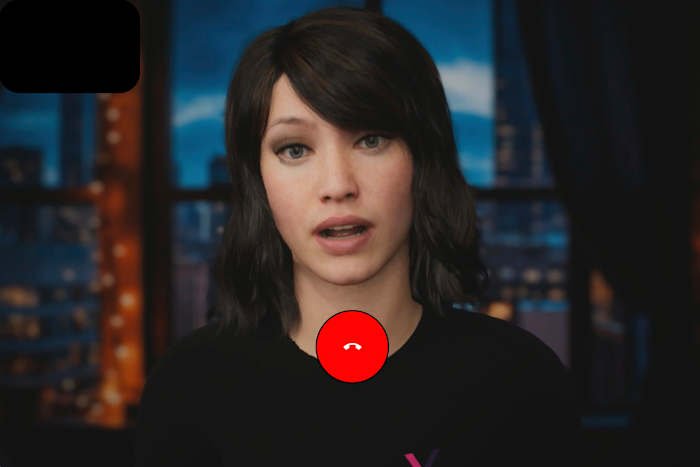

That’s because Ana isn’t human, but an artificial intelligence program created by Hippocratic AI, one of a number of new companies offering ways to automate time-consuming tasks usually performed by nurses and medical assistants.

It’s the most visible sign of AI’s inroads into health care, where hundreds of hospitals are using increasingly sophisticated computer programs to monitor patients’ vital signs, flag emergency situations and trigger step-by-step action plans for care, jobs that were all previously handled by nurses and other health professionals.

Hospitals say AI is helping their nurses work more efficiently while addressing burnout and understaffing. But nursing unions argue that this poorly understood technology is overriding nurses’ expertise and degrading the quality of care patients receive.

“Hospitals have been waiting for the moment when they have something that appears to have enough legitimacy to replace nurses,” said Michelle Mahon of National Nurses United. “The entire ecosystem is designed to automate, de-skill and ultimately replace caregivers.”

Mahon’s group, the largest nursing union in the U.S., has helped organize more than 20 demonstrations at hospitals across the country, pushing for the right to have a say in how AI can be used, and for protection from discipline if nurses decide to disregard automated advice. The group raised new alarms in January when Robert F. Kennedy Jr., the incoming health secretary, suggested AI nurses “as good as any doctor” could help deliver care in rural areas. On Friday, Dr. Mehmet Oz, who’s been nominated to oversee Medicare and Medicaid, said he believes AI can “liberate doctors and nurses from all the paperwork.”

Hippocratic AI initially promoted a rate of $9 an hour for its AI assistants, compared with about $40 an hour for a registered nurse. It has since dropped that language, instead touting its services and seeking to assure customers that they have been carefully tested. The company did not grant requests for an interview.

AI in the hospital can generate false alarms and dangerous advice

Hospitals have been experimenting for years with technology designed to improve care and streamline costs, including sensors, microphones and motion-sensing cameras. Now that data is being linked with electronic medical records and analyzed in an effort to predict medical problems and direct nurses’ care, sometimes before they’ve evaluated the patient themselves.

Adam Hart was working in the emergency room at Dignity Health in Henderson, Nevada, when the hospital’s computer system flagged a newly arrived patient for sepsis, a life-threatening reaction to infection. Under the hospital’s protocol, he was supposed to immediately administer a large dose of IV fluids. But after further examination, Hart determined that he was treating a dialysis patient, or someone with kidney failure. Such patients have to be carefully managed to avoid overloading their kidneys with fluid.

Hart raised his concern with the supervising nurse but was told to just follow the standard protocol. Only after a nearby physician intervened did the patient instead begin to receive a slow infusion of IV fluids.

“You need to keep your thinking cap on; that’s why you’re being paid as a nurse,” Hart said. “Turning over our thought processes to these devices is reckless and dangerous.”

Hart and other nurses say they understand the goal of AI: to make it easier for nurses to monitor multiple patients and quickly respond to problems. But the reality is often a barrage of false alarms, sometimes erroneously flagging basic bodily functions, such as a patient having a bowel movement, as an emergency.

“You’re trying to focus on your work but then you’re getting all these distracting alerts that may or may not mean something,” said Melissa Beebe, a cancer nurse at UC Davis Medical Center in Sacramento. “It’s hard to even tell when it’s accurate and when it’s not because there are so many false alarms.”

Can AI assist in the hospital?

Even the most sophisticated technology will miss signs that nurses routinely pick up on, such as facial expressions and odors, notes Michelle Collins, dean of Loyola University’s College of Nursing. But people aren’t perfect either.

“It would be foolish to turn our back on this completely,” Collins said. “We should embrace what it can do to augment our care, but we should also be careful it doesn’t replace the human element.”

More than 100,000 nurses left the workforce during the COVID-19 pandemic, according to one estimate, the biggest staffing drop in 40 years. As the U.S. population ages and nurses retire, the U.S. government estimates there will be more than 190,000 new openings for nurses every year through 2032.

Faced with this trend, hospital administrators see AI filling a vital role: not taking over care, but helping nurses and doctors gather information and communicate with patients.

‘Sometimes they’re talking to a human and sometimes they’re not’

At the University of Arkansas for Medical Sciences in Little Rock, staffers need to make hundreds of calls every week to prepare patients for surgery. Nurses confirm information about prescriptions, heart conditions and other issues, such as sleep apnea, that must be carefully reviewed before anesthesia.

The problem: many patients only answer their phones in the evening, usually between dinner and their children’s bedtime.

“So what we need to do is find a way to call several hundred people in a 120-minute window, but I really don’t want to pay my staff overtime to do so,” said Dr. Joseph Sanford, who oversees the center’s health IT.

Since January, the hospital has used an AI assistant from Qventus to contact patients and health providers, send and receive medical records and summarize their contents for human staffers. Qventus says 115 hospitals are using its technology, which aims to boost hospital earnings through quicker surgical turnarounds, fewer cancellations and reduced burnout.

Each call begins with the program identifying itself as an AI assistant.

“We always want to be fully transparent with our patients that sometimes they’re talking to a human and sometimes they’re not,” Sanford said.

While companies like Qventus are providing an administrative service, other AI developers see a bigger role for their technology.

Israeli startup Xoltar specializes in humanlike avatars that conduct video calls with patients. The company is working with the Mayo Clinic on an AI assistant that teaches patients cognitive techniques for managing chronic pain. The company is also developing an avatar to help smokers quit. In early testing, patients have spent about 14 minutes talking to the program, which can pick up on facial expressions, body language and other cues, according to Xoltar.

Nursing experts who study AI say such programs may work for people who are relatively healthy and proactive about their care. But that’s not most people in the health system.

“It’s the very sick who are taking up the bulk of health care in the U.S. and whether or not chatbots are positioned for those folks is something we really have to consider,” said Roschelle Fritz of the University of California Davis School of Nursing.

___

The Associated Press Health and Science Department receives support from the Howard Hughes Medical Institute’s Science and Educational Media Group and the Robert Wood Johnson Foundation. The AP is solely responsible for all content.

Copyright 2025 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed without permission.